Cache

To do any work, a program must inevitably load information from main memory (RAM). Because memory runs far slower than the processor, the CPU often ends up waiting for main memory to deliver data or instructions. Those waits are wasted clock cycles, and the speed mismatch between memory and the processor lowers the efficiency of the whole system.

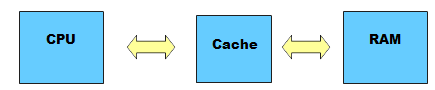

Caches help solve this problem by acting as a middle man between the processor and main memory.

The cache is essentially just memory, but it runs much faster than RAM. Data and instructions that are used often are copied into the cache, so when the CPU needs them they can be retrieved much more quickly. Cache memory is small because it is very expensive. It would not be cost effective to build main memory using the same technology as cache.
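To get a feel for how much a small, fast cache can help, here is a rough sketch of the average time per memory access. The function name `average_access_time` and the timings (1 ns for cache, 100 ns for RAM, 95% of accesses found in the cache) are assumptions picked for illustration, not measurements of any real machine.

```python
def average_access_time(hit_rate, cache_ns, ram_ns):
    """Average time per access when a fraction `hit_rate` is served by the cache."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    # A hit costs one cache access; a miss costs the cache lookup plus a RAM access.
    return hit_rate * cache_ns + (1.0 - hit_rate) * (cache_ns + ram_ns)


# Illustrative numbers only (assumed, not measured).
no_cache = average_access_time(0.0, 0.0, 100.0)    # every access goes to RAM
with_cache = average_access_time(0.95, 1.0, 100.0)  # 95% of accesses hit the cache

print(f"without cache: {no_cache:.1f} ns per access")
print(f"with cache:    {with_cache:.1f} ns per access")
```

With these made-up figures the average access drops from 100 ns to about 6 ns, even though only a small amount of fast memory is involved.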

The CPU will always work from the cache. If the data it needs is not in the cache, it is copied over from RAM into the cache. If the cache is already full, the least used data in the cache is thrown out and replaced with the new data. A replacement algorithm decides what to throw out, with the aim of keeping most of the data the CPU will need in the cache. This is a lot easier than you might expect due to the 80/20 rule: a program typically spends most of its running time in a small part of its code.

Programs tend to spend most of their time in loops of some description. As such, they work on the same data and the same instructions for much of their execution time. This is why caches can dramatically speed up a computer system: the loop code is copied into the cache and will normally stay there until the loop has finished.
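The replacement step and the effect of loops can be sketched with a toy simulation. This is a simplified model, not how any particular CPU is built: the class name `TinyCache`, the addresses, and the capacities are all hypothetical, and it assumes a "least recently used" policy, which is one common way to decide what to throw out (the text above does not say which algorithm real hardware uses).

```python
from collections import OrderedDict


class TinyCache:
    """Toy cache holding at most `capacity` addresses (assumed LRU replacement)."""

    def __init__(self, capacity, ram):
        self.capacity = capacity
        self.ram = ram              # stands in for main memory: address -> value
        self.lines = OrderedDict()  # least recently used first, most recent last
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.lines:
            self.hits += 1
            self.lines.move_to_end(address)   # mark as most recently used
            return self.lines[address]
        self.misses += 1
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)    # throw out the least recently used
        value = self.ram[address]             # slow path: copy over from "RAM"
        self.lines[address] = value
        return value


ram = {address: address * 10 for address in range(1000)}  # made-up contents

# Replacement: with room for 2 addresses, a third distinct address forces one out.
small = TinyCache(capacity=2, ram=ram)
for address in [0, 1, 0, 2]:
    small.read(address)
print(list(small.lines))  # [0, 2]: address 1 was the least recently used

# A loop: the same 4 addresses are fetched on each of 100 iterations.
loop_cache = TinyCache(capacity=8, ram=ram)
for _ in range(100):
    for address in (100, 101, 102, 103):
        loop_cache.read(address)
print(loop_cache.hits, loop_cache.misses)  # 396 4
```

Only the first pass through the loop has to go to RAM. Every later pass is served entirely from the cache, which is why 396 of the 400 reads are hits in this sketch.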